Disclaimer: I used the latest Perplexity and Claude AI models heavily as mentors to support my syntopical reading on this topic and to critique my thoughts for this blog post.

Introduction

Ever since the Industrial Revolution, the introduction of new technologies has typically caused the working class to fret that automation will make their labor obsolete. In 1821, this sentiment was captured in the ‘machinery question’:

“The opinion entertained by the laboring class, that the employment of machinery is frequently detrimental to their interests.” - David Ricardo, 1821

For the handloom weavers of the 1810s, the answer to the question was clearly yes, as power looms caused a collapse in wages and contributed to the rise of the Luddite movement.

For the rest of us, however, General Purpose Technologies (GPTs), meaning technologies that impact vast segments of the economy such as the internal combustion engine, electricity, personal computers and the internet, have historically not caused society-wide mass unemployment. Economists call the error behind this fear the “lump of labor” fallacy: the flawed assumption that there is a fixed amount of work to go around, so that any work taken over by automation leaves human labor worse off. This fallacy has been proven wrong many times, as new GPTs have ended certain industries but created entire industries that had never been seen before.

This post explores whether the unique characteristics of General Purpose AI, in the form of Large Language Models (LLMs), make it qualitatively different enough from historical GPTs to warrant revisiting the machinery question from a multidisciplinary lens.

Terminology: General purpose AI in this post is defined broadly as intelligent non-human agents that are capable of performing complex tasks to meet an objective across a broad range of cognitive domains, in a supervised or autonomous fashion.

Based on my syntopical reading on this topic, spanning two dozen resources (academic research papers, books, blog posts and policy documents), I have concluded that the answer to the machinery question for general purpose AI is most likely yes.

How General Purpose AI is different from Personal Computers or the Internet

Difference 1: General Purpose AI that reaches human-level cognitive performance is a fundamentally labor-displacing technology

This is not my hot take but the words of Mustafa Suleyman, a co-founder of DeepMind (now Google DeepMind) and the current head of AI at Microsoft.

“In the long term…we have to think very hard about how we integrate these tools, because left completely to the market and to their own devices, these are fundamentally labor replacing tools,” Suleyman told CNBC on Wednesday at the World Economic Forum’s annual gathering in Davos, Switzerland.

The techno-optimists who lead most of the major AI labs share similar views on the job-displacement potential of AGI and advanced AI, and are actively promoting this Brave New World future.

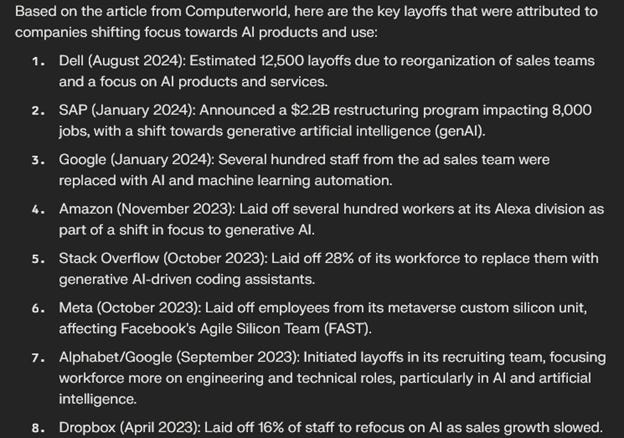

The ‘canary in the coal mine’ for this future is already here, in two forms: (1) direct substitution of human labor with general purpose AI software, and (2) indirect displacement, as companies cut entire departments of human labor to free up resources to pivot to AI-related products and services.

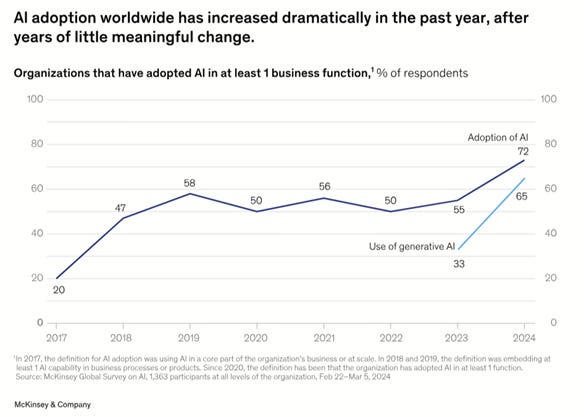

Difference 2: General purpose AI is being adopted faster than PCs and the internet, potentially by an order of magnitude

Difference 3: Explosive growth in AI model advancements since the initial public release of ChatGPT

This will not be a big surprise to anyone who has used ChatGPT since its initial launch and has seen performance improve with each new model update.

The high-level takeaway from the heat map is the significant improvement in the capability of these models since the public release of ChatGPT (November 2022).

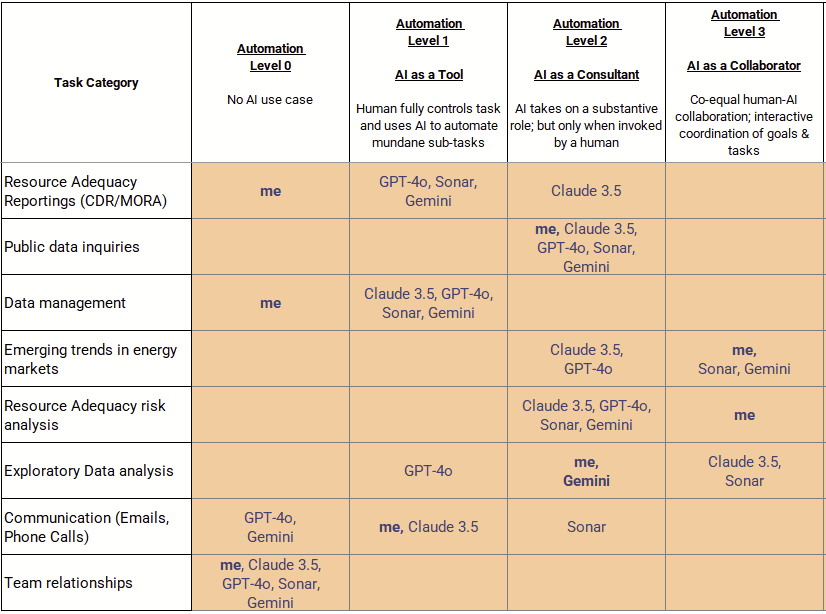

In the span of less than two years, ChatGPT has gone from a silly tool to a consultant/collaborator for the majority of my most valuable work tasks.

My view is that as these models get more and more advanced, the amount I am able to contribute will dwindle toward zero. This sentiment is shared even by vocal supporters of AI models, such as Ethan Mollick on his Substack (One Useful Thing).

Difference 4: “Today’s models are the stupidest they will ever be”

Sam Altman, CEO of OpenAI, has made the comment above twice in public this year, once at the World Economic Forum in Davos and another time on Bill Gates’ podcast Unconfuse Me.

Due to market incentives, AI labs are all keenly focused on reaching human-level intelligence (Artificial General Intelligence, AGI) with their general purpose AI models. So far, no leading indicators of model performance suggest that these advancements will stop with future models.

As for when AGI will arrive, a 2023 survey of AI experts put the aggregate forecast for a 50% chance of human-level machine intelligence (HLMI) at 2047. That is thirteen years earlier than the forecast in the 2022 survey!

In both 2022 and 2023, respondents gave a wide range of predictions for how soon HLMI will be feasible (Figure 3). The aggregate 2023 forecast predicted a 50% chance of HLMI by 2047, down thirteen years from 2060 in the 2022 survey. For comparison, in the six years between the 2016 and 2022 surveys, the expected date moved only one year earlier, from 2061 to 2060.

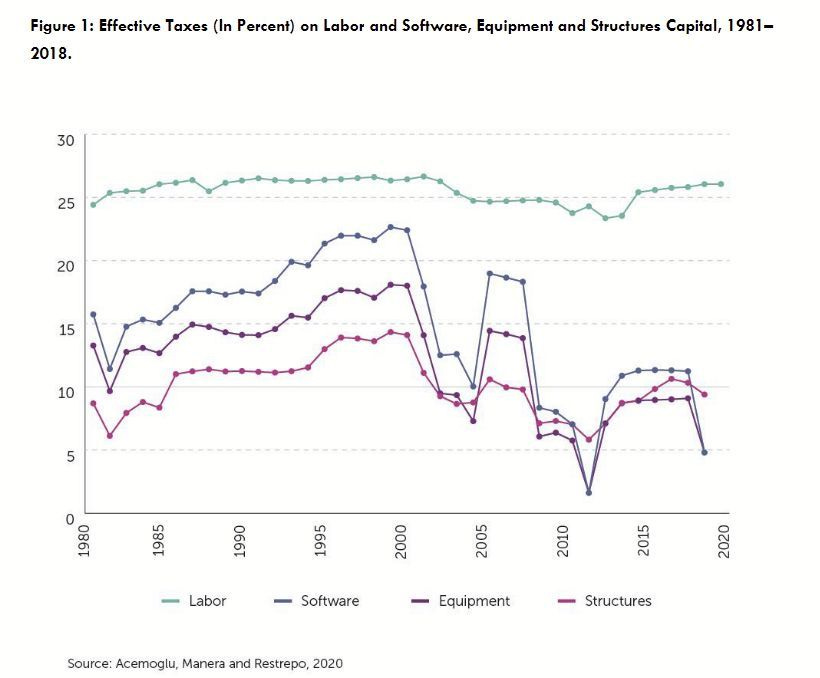

Difference 5: The U.S. tax structure favors substituting capital for human labor

Case Study: Can the latest AI models replace me at my most valuable tasks?

Silicon Valley has a very impressive track record of inventing products that have made life for the average knowledge worker more productive but utterly miserable, from open-office layouts to Microsoft Outlook and, more recently, Microsoft Teams.

I took Google DeepMind’s Levels of AGI framework and applied it to eight of my most valuable cognitive tasks in my current role to show how far current models can automate them.

In the grid, the label “me” marks my own judgment of each task's automation level with the latest AI models. The AI models' own judgments are also included in the grid.

The levels from the Google DeepMind paper that I did not include in the table were:

Level 4 (Expert): AI drives interaction; human provides guidance & feedback or performs subtasks

Level 5 (Superhuman AI): Fully autonomous AI that can perform tasks better than humans.

General Purpose AI tools like Perplexity and Claude have already approached the consultant and collaborator levels of automation for my most valuable tasks in my current knowledge-work job.

John Maynard Keynes, in his famous 1930 essay Economic Possibilities for our Grandchildren, defined “technological unemployment” as, in essence, the following:

a situation where the pace of automation exceeds the pace of new job creation.

By this definition, I might already be experiencing technological unemployment, since I am unable to find new valuable cognitive tasks faster than general purpose AI automates my existing ones.

To answer the question of this case study: no, current AI models cannot replace me at any of my most valuable tasks, but the progression of these models suggests that this might happen in the near future as we get closer and closer to AGI systems.

Objections and Cognitive Biases impacting human judgment on this topic

Behavioral economics is one of my favorite subjects, and in dedication to the late Daniel Kahneman, I want to highlight some of the cognitive biases at play on this topic, both for those who disagree with my position and for me.

Computing Machinery and Intelligence, Alan Turing 1950 (the origin of the Turing Test)

The Heads in the Sand Objection: “The consequences of machines thinking would be too dreadful. Let us hope and believe that they cannot do so.”

Turing's response: I do not think that this argument is sufficiently substantial to require refutation. Consolation would be more appropriate: perhaps this should be sought in the transmigration of souls.

Lady Lovelace’s Objection: Machines can only do what they are programmed to do and therefore cannot originate anything or be creative.

Turing’s response: A variant of Lady Lovelace's objection states that a machine can "never do anything really new." This may be parried for a moment with the saw, "There is nothing new under the sun." Who can be certain that "original work" that he has done was not simply the growth of the seed planted in him by teaching, or the effect of following well-known general principles. A better variant of the objection says that a machine can never "take us by surprise." This statement is a more direct challenge and can be met directly. Machines take me by surprise with great frequency.

Cognitive Biases (not an exhaustive list)

Anchoring Bias: relying too heavily on the first piece of information we encounter

Ex: “GPT-4 is dumb, so I have nothing to worry about when it comes to AI ever replacing me.”

Anthropocentric Bias: assuming humans are the peak of intelligence

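General purpose AI is an alien intelligence that is not trying to equal human intelligence. AlphaGo's Move 37 against Lee Sedol in 2016 is the classic example: human experts initially read it as a mistake, yet it helped AlphaGo win the game.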

Planning Fallacy: our tendency to underestimate how long it will take to complete a task

In this case, it works in reverse: we underestimate how quickly AI technology is advancing.

To capture both sides, here are a few cognitive biases that Claude AI suggested I might be falling for in this post.

I agree that I am probably affected by all of these to varying degrees. But even if we don’t experience the worst possible outcome of full human labor displacement, this thought experiment serves two important purposes:

It provides an insurance policy by using a precautionary approach to prepare for the most adverse scenarios.

By looking at the extreme cases, we can learn a lot about the intermediate cases. It is highly likely that some job displacement and wage depression will occur as companies adopt general purpose AI.

Potential responses for knowledge workers

Fight Back (Loudly): The 2023 Screen Actors Guild and Writers Guild of America Strikes

The concurrent SAG-AFTRA and WGA strikes last year, which addressed several issues related to the use of generative AI, were pioneering and set an important precedent for protecting workers from AI-related job loss.

Personally, I will fight to keep the autonomy and competence in my job from being sidelined by ever-advancing general purpose AI systems. Autonomy and competence are core to what makes work meaningful for people, so I will make sure I retain them by any means.

Fight Back (Quietly): The Amish approach to technology adoption

The Amish approach to technology adoption centers on a community-focused, human-centered vision: new technologies are adopted mindfully, based on how well they align with one’s values and morals.

Demand Intelligent Augmentation instead of Artificial General Intelligence (AGI) and LLM Agents

Hindsight bias makes us assume that historical outcomes were more likely than they actually were. In the 1950s, the long-term goal of human-level AI systems was in tension with human-augmenting AI in the form of Intelligent Augmentation. AGI won that initial battle back in the 1950s, but it is not too late to change paths.

Intelligent Augmentation (IA) instead of AGI would help us avoid the “Turing Trap” described by Stanford economist Erik Brynjolfsson:

“The distributive effects of AI depend on whether it is primarily used to augment human labor or automate it. When AI augments human capabilities, enabling people to do things they never could before, then humans and machines are complements. Complementarity implies that people remain indispensable for value creation and retain bargaining power in labor markets and in political decision-making. In contrast, when AI replicates and automates existing human capabilities, machines become better substitutes for human labor and workers lose economic and political bargaining power.”

The How AI Fails Us paper from Harvard’s Justice, Health & Democracy Initiative does a great job of popping the bubble that AGI is somehow desired by knowledge workers or even by the general public (outside of Silicon Valley).

Resistance is Futile: Learn and Adapt

This is the common response promoted on LinkedIn. This pathway will probably include the following objectives (suggested by Claude 3 Opus):

Proactively build skills in areas that complement AI rather than compete with it. Focus on skills like critical thinking, emotional intelligence, and creativity.

Look for opportunities to work with AI tools in ways that enhance your productivity and results. Experiment with prompts and workflows to find the optimal human-AI collaboration.

Stay informed on the latest developments in AI to understand its true capabilities and limitations. Don't let unwarranted hype drive fears.

All that being said, I am not convinced there are any rare and valuable cognitive tasks I can do that general purpose AI will not eventually become competent at.

Neither was Daniel Kahneman:

“And one of the recurrent issues, both in talks and in conversations, was whether AI can eventually do whatever people can do. And will there be anything that is reserved for human beings? And frankly, I don't see any reason to set limits on what AI can do. We have in our heads a wonderful computer. It's made of meat, but it's a computer. It's extremely noisy. It does parallel processing. It is extraordinarily efficient. But there is no magic there. And so it's very difficult to imagine that with sufficient data, there will remain things that only humans can do.”

What is worse than being displaced once by general purpose AI? Being displaced twice or three times.

Other (interesting/pragmatic) options from Claude 3 Opus

Focus on Your Unique Value

Remember that AI is a tool to augment and assist human workers, not fully replace them. Focus on the unique skills, judgment and creativity you bring that AI cannot replicate.

AI still has significant limitations. Emphasize areas where human intelligence excels over AI, such as emotional intelligence, abstract thinking, and adapting to novel situations.

Reframe AI as a collaborator that can handle routine tasks, freeing you up to focus on higher-level work that delivers more value.

Set Boundaries with AI Use (related to Amish strategy above)

Using narrow AI models for specific tasks can provide assistance while keeping you in the driver's seat for higher-level work. This can build confidence.

When using more advanced AI like Claude, give clear instructions to let you handle the key reasoning and decision making. Use AI to accelerate work rather than automate it entirely.

Regularly unplug from technology to reconnect with the physical world. This reminds you of human capabilities AI does not have.

Advocate for Responsible AI Practices

Look for employers who are transparent about their AI use and who provide ample opportunities for employee input and upskilling.

Support efforts to create legal and ethical frameworks around workplace AI. Collective action via unions or professional associations can create guardrails.

Encourage your organization to have clear guidelines around using AI responsibly, protecting worker privacy, and ensuring human oversight.

Conclusion

I would like to close with a powerful quote from the historian Yuval Noah Harari that speaks directly to which potential future we end up choosing for general purpose AI:

“History is not deterministic, and the future will be shaped by the choices we all make in the coming years.”

Thanks for reading!